The History of Quality

The quality movement can trace its roots back to medieval Europe, where craftsmen began organizing into unions called guilds in the late 13th century.

Until the early 19th century, manufacturing in the industrialized world tended to follow this craftsmanship model. The factory system, with its emphasis on product inspection, started in Great Britain in the mid-1750s and grew into the Industrial Revolution in the early 1800s.

In the early 20th century, manufacturers began to include processes in quality practices.

After the United States entered World War II, quality became a critical component of the war effort: Bullets manufactured in one state, for example, had to work consistently in rifles made in another. The armed forces initially inspected virtually every unit of product; then, to simplify and speed up this process without compromising safety, the military began to use sampling techniques for inspection, aided by the publication of military-specification standards and training courses in Walter Shewhart’s statistical process control techniques.

The birth of total quality in the United States came as a direct response to the quality revolution in Japan following World War II. The Japanese welcomed the input of Americans Joseph M. Juran and W. Edwards Deming and, rather than concentrating on inspection, focused on improving all organizational processes through the people who used them.

By the 1970s, U.S. industrial sectors such as automobiles and electronics had been broadsided by Japan’s high-quality competition. The U.S. response, emphasizing not only statistics but approaches that embraced the entire organization, became known as total quality management (TQM).

By the last decade of the 20th century, TQM was considered a fad by many business leaders. But while the use of the term TQM has faded somewhat, particularly in the United States, its practices continue.

In the few years since the turn of the century, the quality movement seems to have matured beyond Total Quality. New quality systems have evolved from the foundations of Deming, Juran and the early Japanese practitioners of quality, and quality has moved beyond manufacturing into service, healthcare, education and government sectors.

From the end of the 13th century to the early 19th century, craftsmen across Europe were organized into unions called guilds. These guilds were responsible for developing strict rules for product and service quality. Inspection committees enforced the rules by marking flawless goods with a special mark or symbol.

Craftsmen themselves often placed a second mark on the goods they produced. At first this mark was used to track the origin of faulty items. But over time the mark came to represent a craftsman’s good reputation. For example, stonemasons’ marks symbolized each guild member’s obligation to satisfy his customers and enhance the trade’s reputation.

*Quote from “Quality Handbook” site

The History of Quality – The Industrial Revolution

American quality practices evolved in the 1800s as they were shaped by changes in predominant production methods:

Craftsmanship

The factory system

The Taylor system

Craftsmanship

In the early 19th century, manufacturing in the United States tended to follow the craftsmanship model used in European countries. In this model, young boys learned a skilled trade while serving as an apprentice to a master, often for many years.

Since most craftsmen sold their goods locally, each had a tremendous personal stake in meeting customers’ needs for quality. If quality needs weren’t met, the craftsman ran the risk of losing customers not easily replaced. Therefore, masters maintained a form of quality control by inspecting goods before sale.

The Factory System

The factory system, a product of the Industrial Revolution in Europe, began to divide the craftsmen’s trades into specialized tasks. This forced craftsmen to become factory workers and forced shop owners to become production supervisors, and marked an initial decline in employees’ sense of empowerment and autonomy in the workplace.

Quality in the factory system was ensured through the skill of laborers supplemented by audits and/or inspections. Defective products were either reworked or scrapped.

The Taylor System

Late in the 19th century the United States broke further from European tradition and adopted a new management approach developed by Frederick W. Taylor. Taylor’s goal was to increase productivity without increasing the number of skilled craftsmen. He achieved this by assigning factory planning to specialized engineers and by using craftsmen and supervisors, who had been displaced by the growth of factories, as inspectors and managers who executed the engineers’ plans.

Taylor’s approach led to remarkable rises in productivity, but it had significant drawbacks: Workers were once again stripped of their dwindling power, and the new emphasis on productivity had a negative effect on quality.

To remedy the quality decline, factory managers created inspection departments to keep defective products from reaching customers. If defective product did reach the customer, it was more common for upper managers to ask the inspector, “Why did we let this get out?” than to ask the production manager, “Why did we make it this way to begin with?”

The History of Quality – The Early 20th Century

The beginning of the 20th century marked the inclusion of “processes” in quality practices.

A “process” is defined as a group of activities that takes an input, adds value to it and provides an output, such as when a chef transforms a pile of ingredients into a meal.

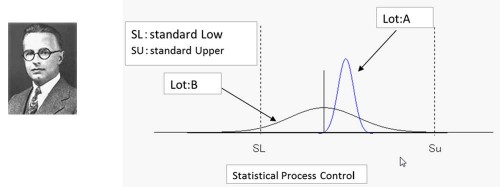

Walter Shewhart, a statistician for Bell Laboratories, began to focus on controlling processes in the mid-1920s, making quality relevant not only for the finished product but for the processes that created it.
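Shewhart’s central tool was the control chart, which compares each new measurement against limits derived from the process’s own past behavior. The sketch below is a minimal illustration, assuming limits set at the baseline mean plus or minus three sample standard deviations of individual readings; the function names and the measurement data are hypothetical, and charts used in practice (for example X-bar and R charts) usually estimate the spread differently.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple

def control_limits(baseline: Sequence[float], k: float = 3.0) -> Tuple[float, float, float]:
    """Return (lower, center, upper): the baseline mean +/- k sample standard deviations."""
    center = mean(baseline)
    sigma = stdev(baseline)  # assumption: spread estimated from individual readings
    return center - k * sigma, center, center + k * sigma

def out_of_control(readings: Sequence[float], limits: Tuple[float, float, float]) -> List[int]:
    """Return the indices of readings that fall outside the control limits."""
    lower, _, upper = limits
    return [i for i, x in enumerate(readings) if x < lower or x > upper]

# Hypothetical part diameters (mm) recorded while the process ran normally.
baseline = [10.02, 9.98, 10.01, 9.99, 10.00, 10.03, 9.97, 10.01, 10.00, 9.99]
limits = control_limits(baseline)

# Hypothetical later readings; any flagged index marks a point worth investigating.
new_readings = [10.01, 9.98, 10.12, 10.00]
print(out_of_control(new_readings, limits))
```

A point outside the limits does not by itself prove a defect; it signals variation beyond the process’s usual pattern, which is the cue to look for a cause in the process rather than only sorting out bad units.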

During World War II, quality became an important safety issue. Unsafe military equipment was clearly unacceptable, and the U.S. armed forces inspected virtually every unit produced to ensure that it was safe for operation. This practice required huge inspection forces and caused problems in recruiting and retaining competent inspection personnel.

To ease the problems without compromising product safety, the armed forces began to use sampling inspection to replace unit-by-unit inspection. With the aid of industry consultants, particularly from Bell Laboratories, they adapted sampling tables and published them in a military standard, known as Mil-Std-105. These tables were incorporated into the military contracts so suppliers clearly understood what they were expected to produce.
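Sampling inspection replaces checking every unit with a rule of the form “inspect n units drawn from the lot and accept the lot if no more than c are defective.” The minimal sketch below computes how often such a plan would accept a lot with a given fraction defective, assuming a binomial model (a lot that is large relative to the sample). The plan n = 50, c = 1 is a hypothetical example, not a plan taken from the Mil-Std-105 tables.

```python
from math import comb

def acceptance_probability(n: int, c: int, p: float) -> float:
    """Probability that a single sampling plan accepts a lot.

    n: number of units inspected from the lot
    c: acceptance number (maximum defectives allowed in the sample)
    p: fraction of the lot that is defective
    Assumes a binomial model, i.e. the lot is large relative to n.
    """
    return sum(comb(n, d) * p**d * (1 - p) ** (n - d) for d in range(c + 1))

# Hypothetical plan: inspect 50 units, accept the lot if 0 or 1 are defective.
for p in (0.01, 0.05, 0.10):
    print(f"fraction defective {p:.2f}: P(accept) = {acceptance_probability(50, 1, p):.3f}")
```

Under this hypothetical plan, a lot that is 1% defective is accepted about 91% of the time, while a lot that is 10% defective is accepted only about 3% of the time. Plotting this probability against the fraction defective gives the plan’s operating characteristic (OC) curve, which lets buyer and supplier see in advance how strict a plan is.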

The armed forces also helped suppliers improve quality by sponsoring training courses in Walter Shewhart’s statistical quality control (SQC) techniques.

But while the training improved quality in some organizations, most companies had little motivation to truly integrate the techniques. As long as government contracts paid the bills, organizations’ top priority remained meeting production deadlines. What’s more, most SQC programs were terminated once the government contracts came to an end.

The History of Quality – Total Quality

The birth of total quality in the United States was in direct response to a quality revolution in Japan following World War II, as major Japanese manufacturers converted from producing military goods for internal use to producing civilian goods for trade.

At first, Japan had a widely held reputation for shoddy exports, and its goods were shunned by international markets. This led Japanese organizations to explore new ways of thinking about quality.

Deming, Juran and Japan

The Japanese welcomed input from foreign companies and lecturers, including two American quality experts:

W. Edwards Deming, who had become frustrated with American managers when most programs for statistical quality control were terminated once the war and government contracts came to an end.

Joseph M. Juran, who predicted that the quality of Japanese goods would overtake the quality of goods produced in the United States by the mid-1970s because of Japan’s revolutionary rate of quality improvement.

Japan’s strategies represented the new “total quality” approach. Rather than relying purely on product inspection, Japanese manufacturers focused on improving all organizational processes through the people who used them. As a result, Japan was able to produce higher-quality exports at lower prices, benefiting consumers throughout the world.

American managers were generally unaware of this trend, assuming any competition from the Japanese would ultimately come in the form of price, not quality. In the meantime, Japanese manufacturers began increasing their share in American markets, causing widespread economic effects in the United States: Manufacturers began losing market share, organizations began shipping jobs overseas, and the economy suffered unfavorable trade balances. Overall, the impact on American business jolted the United States into action.

The American Response

At first, U.S. manufacturers held on to their assumption that Japanese success was price-related, and thus responded to Japanese competition with strategies aimed at reducing domestic production costs and restricting imports. This, of course, did nothing to improve American competitiveness in quality.

As years passed, price competition declined while quality competition continued to increase. By the end of the 1970s, the American quality crisis reached major proportions, attracting attention from national legislators, administrators and the media. A 1980 NBC-TV News special report, “If Japan Can… Why Can’t We?” highlighted how Japan had captured the world auto and electronics markets. Finally, U.S. organizations began to listen.

The chief executive officers of major U.S. corporations stepped forward to provide personal leadership in the quality movement. The U.S. response, emphasizing not only statistics but approaches that embraced the entire organization, became known as Total Quality Management (TQM).

Several other quality initiatives followed. The ISO 9000 series of quality-management standards, for example, was published in 1987. The Baldrige National Quality Program and Malcolm Baldrige National Quality Award were established by the U.S. Congress the same year. American companies were at first slow to adopt the standards but eventually came on board.

The History of Quality – Beyond Total Quality

By the end of the 1990s Total Quality Management (TQM) was considered little more than a fad by many American business leaders (although it still retained its prominence in Europe).

While use of the term TQM has faded somewhat, particularly in the United States, quality expert Nancy Tague says: “Enough organizations have used it with success that, to paraphrase Mark Twain, the reports of its death have been greatly exaggerated.” (see The Quality Toolbox, ASQ Quality Press, 2005).

As the 21st century begins, the quality movement has matured. Tague says new quality systems have evolved beyond the foundations laid by Deming, Juran and the early Japanese practitioners of quality.

Some examples of this maturation:

In 2000 the ISO 9000 series of quality management standards was revised to increase emphasis on customer satisfaction.

Beginning in 1995, the Malcolm Baldrige National Quality Award added a business results criterion to its measures of applicant success.

Six Sigma, a methodology developed by Motorola to improve its business processes by minimizing defects, evolved into an organizational approach that achieved breakthroughs – and significant bottom-line results. When Motorola received a Baldrige Award in 1988, it shared its quality practices with others.

Quality function deployment was developed by Yoji Akao as a process for focusing on customer wants or needs in the design or redesign of a product or service.

Sector-specific versions of the ISO 9000 series of quality management standards were developed for such industries as automotive (QS-9000 and ISO/TS 16949), aerospace (AS9000) and telecommunications (TL 9000), and related standards were developed for environmental management (ISO 14000).

Quality has moved beyond the manufacturing sector into such areas as service, healthcare, education and government.

The Malcolm Baldrige National Quality Award has added education and healthcare to its original categories: manufacturing, small business and service. Many advocates are pressing for the adoption of a “nonprofit organization” category as well.

Continuous Improvement

Continuous improvement is an ongoing effort to improve products, services or processes. These efforts can seek “incremental” improvement over time or “breakthrough” improvement all at once.

Among the most widely used tools for continuous improvement is a four-step quality model, the plan-do-check-act (PDCA) cycle, also known as the Deming cycle or Shewhart cycle:

Plan: Identify an opportunity and plan for change.

Do: Implement the change on a small scale.

Check: Use data to analyze the results of the change and determine whether it made a difference.

Act: If the change was successful, implement it on a wider scale and continuously assess your results. If the change did not work, begin the cycle again.
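Read as a procedure, the cycle is a loop: each candidate change is tried on a small scale, judged against data, and either adopted or dropped before the next turn begins. The sketch below models that loop under simplifying assumptions: each change is scored by a single measurement where higher is better, a change counts as successful only if it beats the current standard, and the `pilot` callback and the sample figures are hypothetical placeholders.

```python
from typing import Callable, Iterable, List, Tuple

def pdca(candidates: Iterable[str],
         pilot: Callable[[str], float],
         baseline: float) -> Tuple[List[str], float]:
    """Run repeated plan-do-check-act cycles over candidate changes.

    pilot(change) is a hypothetical stand-in for the Do step: it tries the
    change on a small scale and returns the measured result (higher is better).
    Returns the changes adopted and the final performance level.
    """
    adopted: List[str] = []
    for change in candidates:          # Plan: take the next improvement opportunity
        result = pilot(change)         # Do: implement it on a small scale
        if result > baseline:          # Check: does the data show an improvement?
            adopted.append(change)     # Act: roll the change out more widely
            baseline = result          # the improved level becomes the new standard
        # Otherwise the change is dropped and the cycle begins again.
    return adopted, baseline

# Hypothetical pilot results, e.g. on-time delivery rate after each trial.
trial_results = {"new checklist": 0.91, "extra shift": 0.88, "supplier audit": 0.94}
adopted, level = pdca(trial_results, lambda name: trial_results[name], baseline=0.90)
print(adopted, level)
```

Updating the baseline after each success is what keeps the loop improving: later changes must beat the improved standard, not the original one.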

Other widely used methods of continuous improvement — such as Six Sigma, Lean, and Total Quality Management — emphasize employee involvement and teamwork; measuring and systematizing processes; and reducing variation, defects and cycle times.

Continuous or Continual?

The terms continuous improvement and continual improvement are frequently used interchangeably. But some quality practitioners make the following distinction:

Continual improvement: a broader term preferred by W. Edwards Deming to refer to general processes of improvement and encompassing “discontinuous” improvements—that is, many different approaches, covering different areas.

Continuous improvement: a subset of continual improvement, with a more specific focus on linear, incremental improvement within an existing process. Some practitioners also associate continuous improvement more closely with techniques of statistical process control.

Malcolm Baldrige National Quality Award

The Baldrige Award is presented annually by the President of the United States to organizations that demonstrate quality and performance excellence. Three awards may be given annually in each of five categories:

Manufacturing

Service company

Small business

Education

Healthcare

Established by Congress in 1987 for manufacturers, service businesses and small businesses, the MBNQA was designed to raise awareness of quality management and recognize U.S. companies that have implemented successful quality-management systems.

The education and healthcare categories were added in 1999.

The MBNQA is named after the late Secretary of Commerce Malcolm Baldrige, a proponent of quality management. The U.S. Commerce Department’s National Institute of Standards and Technology manages the award and ASQ administers it.

Organizations that apply for the MBNQA are judged by an independent board of examiners. Recipients are selected based on achievement and improvement in seven areas, known as the Baldrige Criteria for Performance Excellence:

1. Leadership: How upper management leads the organization, and how the organization leads within the community.

2. Strategic planning: How the organization establishes and plans to implement strategic directions.

3. Customer and market focus: How the organization builds and maintains strong, lasting relationships with customers.

4. Measurement, analysis, and knowledge management: How the organization uses data to support key processes and manage performance.

5. Human resource focus: How the organization empowers and involves its workforce.

6. Process management: How the organization designs, manages and improves key processes.

7. Business/organizational performance results: How the organization performs in terms of customer satisfaction, finances, human resources, supplier and partner performance, operations, governance and social responsibility, and how the organization compares to its competitors.

*Quote from “The History of Quality – Overview”